Introduction

In the first quarter of 2024, APWG reported an average of 300,000 unique phishing attacks per month. The Microsoft Digital Defense Report also showed that the problem is driven by the low cost barrier in the phishing market, a consequence of the commercialization of phishing kits. Phishing kits are code repositories built to mimic benign websites while redirecting the information typed into credential fields to a centralized server, to be sold later on the dark market. These kits are made to be easily deployed by attackers at scale.

Despite the many efforts to classify phishing pages based on visual differences between malicious websites and their benign counterparts, there is little research on the distribution of phishing kits and how they are deployed over time. One recent study tackled this by collecting a handful of phishing kits and manually extracting fingerprintable information from them, which was later used to identify which websites in the wild correspond to which phishing kits. Because the phishing kits analyzed in that study were freely distributed in Telegram channels, we argue that this approach only captures simple phishing samples, which might not be responsible for the majority of effective phishing attacks.

We improve on that with a mechanism that identifies phishing kits at large scale by measuring their behavior at crawling time. This enables the security analyst to group URLs that run the same code and are consequently deployed from the same phishing kit.

To that end, we built a tool that bypasses code obfuscation entirely, regardless of the obfuscation technique used. It also offers a broad view of the phishing landscape by analyzing crawler data over a long period of time (TODO). Besides revealing common practices and the lifetime of phishing pages, this lets the analyst follow the evolution of phishing pages and, hopefully, attribute different phishing pages to the same likely authors.

Finally, the tool lets the analyst check which samples have never been seen before in terms of client-side behavior. Analysts receive thousands of phishing websites to review daily, so automatically filtering out those that were already seen saves valuable human time.

Crawler

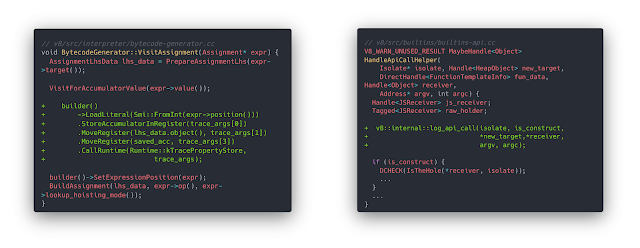

The most important part of the crawler is the browser. We use an instrumented version of Chromium with slight modifications to the bytecode generation pipeline and to runtime function calling, which let us record JavaScript property accesses and function calls. This browser shows exactly which operations each website executes at runtime, in a way that the website itself cannot detect (the main advantage over the regular Chrome DevTools Protocol (CDP) approach). Our modifications follow previous studies that applied the same technique to older versions of the browser.

To log function calls, we hook the runtime function-calling method used inside Chromium. To log property accesses, we instrumented the bytecode generation process to emit an extra bytecode that calls a logging function, with the relevant information, whenever a property is loaded or stored. We then packaged this instrumented build of Chromium in a Docker container to make it scalable.

The log produced by the browser has the following characteristics. For each process created by the website being visited, one log file is written to disk with the sequence of instructions executed by the browser. Besides the instructions, the log file also contains the source code that was loaded before the instructions were executed. Each instruction can also be attributed to one specific script, loaded from a specific origin inside the V8 execution context.

A key problem with phishing websites is that phishing kits are often obfuscated, sometimes with different tools. With this approach we bypass obfuscation completely: even though the source code may look different under different obfuscation techniques, the browser sees the exact same sequence of instructions being executed.

Another problem phishing crawlers often have to handle is cloaking, which we do not have to tackle here for a simple reason. We want to cluster together websites that behave in the same way, so even if our browser is redirected due to cloaking, we can still cluster together the websites that use cloaking in the same way. We argue that this is enough information to identify phishing pages deployed from the same phishing kit (TODO).

In general, the full crawler works as follows. A Trigger module runs twice a day at randomly chosen times, which is important to avoid being cloaked by platforms that do not allow crawling. When it runs, it calls three other modules sequentially. First, it runs the Downloader modules, which fetch publicly available feeds of phishing URLs that are reported and validated by security analysts (PhishTank, OpenPhish, and PhishStats). It then checks the fetched URLs against a database of previously crawled URLs to avoid crawling the same URL again. If there are any new URLs, the Trigger runs the Analyzer module on them. The Analyzer runs the Instrumented Browser on each URL for 10 s, then a tool called Resources Saver to save the public resources of the page (such as images, icons, and source code), and then KitPhishR, a tool that looks for phishing kits stored on the server hosting the phishing page. The browser runs in a Docker container that is created to analyze a batch of 10 URLs and is then destroyed and replaced by a fresh container for the next batch.
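The deduplication and batching steps described above can be sketched as follows. This is a minimal illustration, not the crawler's actual code: the function names `new_urls` and `batches` are hypothetical, and the database of previously crawled URLs is assumed to be available as an in-memory set.

```python
from itertools import islice
from typing import Iterable, Iterator

BATCH_SIZE = 10  # URLs analyzed per container, as described in the text


def new_urls(downloaded: Iterable[str], already_crawled: set[str]) -> list[str]:
    """Keep only URLs that were not crawled before (hypothetical helper).

    Order is preserved and repeats within the same download are dropped.
    """
    seen = set(already_crawled)
    fresh = []
    for url in downloaded:
        if url not in seen:
            seen.add(url)
            fresh.append(url)
    return fresh


def batches(urls: list[str], size: int = BATCH_SIZE) -> Iterator[list[str]]:
    """Yield consecutive batches; each batch would get its own fresh container."""
    it = iter(urls)
    while batch := list(islice(it, size)):
        yield batch
```

With 23 new URLs, `batches` would yield groups of 10, 10, and 3, so three containers would be created and destroyed in turn.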

Information on the resources and the phishing kits (obtained with KitPhishR) is not used in the processing pipeline. It will be used in a validation phase to verify whether the phishing URLs assigned to the same cluster indeed come from the same phishing kit.

After the analysis is completed, we store the results of each URL in a separate directory named after the hash of the crawled domain, which also lets us make sure that the same domain is not crawled twice.
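A possible way to derive such a directory name is sketched below. The text does not say which hash function is used, so SHA-256 is an assumption here, as are the helper names `domain_dir` and `already_crawled` and the choice to hash the lowercased hostname.

```python
import hashlib
from pathlib import Path
from urllib.parse import urlsplit


def domain_dir(url: str, root: Path) -> Path:
    """Map a URL to a result directory named after the hash of its domain.

    SHA-256 is an assumption; the original text only says "hash of the domain".
    """
    domain = (urlsplit(url).hostname or "").lower()
    digest = hashlib.sha256(domain.encode("utf-8")).hexdigest()
    return root / digest


def already_crawled(url: str, root: Path) -> bool:
    """A domain counts as crawled if its result directory already exists."""
    return domain_dir(url, root).is_dir()
```

Because only the domain is hashed, two URLs on the same host map to the same directory regardless of path or query string.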

After the tool finishes analyzing the URLs, storage limitations on the server require us to upload all sample results to Google Drive, where the samples are organized by source, day, and hour of crawling.

Projector

With the logs in hand, we can run the projector once a day. For each day, this tool determines which samples are similar to ones seen before and which appear to be new.

Since we can't keep all the data on disk at the same time, the projector downloads the samples one day at a time, uses the Log Parser module to transform the log information of each sample into an Intermediary Representation of the logs, and then adds it to the dataset using the Dataset Parser module.

The Log Parser treats each sample as containing JavaScript code from n different sources during execution. It therefore breaks the single sequence of instructions produced by the browser into n sequences of instructions, one per source. We call each of these sequences an Instruction Block, and each has a unique URL associated with it.

We do this because our main goal is not to find exactly identical behavior between phishing websites, but to identify common patterns in their behavior. This division makes it easier to identify similar files across phishing websites, which can be used to understand the evolution of a phishing kit. That is why working with Instruction Blocks is better than handling a single stream of logs.
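The splitting into Instruction Blocks can be sketched as below. The real log format is not specified here, so the sketch assumes each log record has already been parsed into a `(script_url, instruction)` pair; that record shape and the name `split_into_blocks` are illustrative assumptions.

```python
from typing import Iterable


def split_into_blocks(trace: Iterable[tuple[str, str]]) -> dict[str, list[str]]:
    """Group one interleaved instruction trace into per-script Instruction Blocks.

    `trace` is assumed to be a sequence of (script_url, instruction) records.
    The relative order of instructions inside each block is preserved.
    """
    blocks: dict[str, list[str]] = {}
    for script_url, instruction in trace:
        blocks.setdefault(script_url, []).append(instruction)
    return blocks


# Hypothetical trace with two scripts whose instructions interleave.
trace = [
    ("https://phish.example/app.js", "get document.forms"),
    ("https://cdn.example/jquery.js", "call addEventListener"),
    ("https://phish.example/app.js", "set form.action"),
]
blocks = split_into_blocks(trace)
```

Here `blocks` has two entries, one per script URL, so a shared library such as jQuery ends up in its own block instead of being mixed into the kit-specific code.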

After all the data is gathered in the Dataset, we transform the text-based representation of the logs into embeddings so that regular clustering techniques can be applied on top of them. The Dataset Embedding module was designed to be adaptable and can be used with either Doc2Vec or SBERT. In our experiments, SBERT produces less noisy embeddings and places similar samples closer together. In addition, SBERT's attention layer gives it a larger importance window than Doc2Vec, and it is faster because it is based on a pre-trained model. The Dataset Embedding module embeds each Instruction Block, producing a variable number of embeddings per sample. One can think of the result as a matrix of shape n × F, where n is the (variable) number of Instruction Blocks in the sample and F is the number of features of the embedding technique, which is the same for all samples.

In the Clusterizer module, each sample is represented by a matrix with a different number of rows, which makes comparing samples non-trivial. We want to compute a Representative Vector for each sample, derived from the set of embeddings calculated for that sample, and then use it to compare samples with one another. The module was built to be generic, which let us try different approaches. The first one we tested was simply the average of the rows of the matrix, which ended up creating more noise than information. We then tried selecting the most important row in the whole matrix, representing the file that best describes the sample, but this ended up highlighting JavaScript libraries such as jQuery, Bootstrap, and Popper.

Finally, we realized that, even though the number of rows differs between sample matrices, we can multiply the transpose of the sample matrix by the matrix itself to obtain an F × F matrix for each sample, which makes the samples comparable. The problem is that the SBERT model produces embeddings with 1024 dimensions, so the resulting matrix has more than 1 million entries per sample. We address this by fitting an Incremental PCA with 256 components and a batch size of 256, chosen because of memory limitations. The result is a Representative Vector of size 256 for each sample in the dataset.
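The dimensions involved can be written out explicitly. The symbols X (sample matrix), e_i (embedding of the i-th Instruction Block), G (the product), and v (the Representative Vector) are notation introduced here for illustration only; flattening G before the PCA is an assumption about how the F × F matrix is fed to it.

```latex
X = \begin{bmatrix} e_1^\top \\ \vdots \\ e_n^\top \end{bmatrix} \in \mathbb{R}^{n \times F},
\qquad
G = X^\top X = \sum_{i=1}^{n} e_i e_i^\top \in \mathbb{R}^{F \times F}

F = 1024 \;\Rightarrow\; F^2 = 1024 \times 1024 = 1{,}048{,}576 \text{ entries in } G

v = \mathrm{PCA}_{256}\big(\operatorname{vec}(G)\big) \in \mathbb{R}^{256}
```

The shape of G depends only on F, never on n, which is what makes samples with different numbers of Instruction Blocks comparable.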

This approach yields considerably more accurate clusters for samples with similar execution traces and roughly the same number of instruction blocks. However, larger differences in the number of instruction blocks still affect the clustering, which is expected with the matrix multiplication approach because the product accumulates a contribution from every instruction block. We have yet to find an alternative that completely disregards the number of instruction blocks in a sample.

Finally, the Clusterizer module takes the embeddings as input and supports several clustering algorithms, such as DBSCAN, OPTICS, and HDBSCAN. Our initial tests used DBSCAN, but the algorithm for the final pipeline will be chosen based on performance.

All of this makes up the Projector module, which runs every day in parallel with the crawler. While the crawler runs every day to collect new samples, the projector runs every day to process them. It uses the samples from previous days to build the embedding space and then projects the new samples onto the previously known clusters to determine which of today's samples are new and which are similar to ones seen before. This tells security analysts which samples they need to inspect manually and which are not worth inspecting.
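One simple way such a projection step could work is sketched below. The text does not specify the assignment rule, so this is an assumption: a new sample inherits the cluster of its nearest previously clustered sample if that neighbor lies within a distance threshold `eps`, and is flagged as new otherwise. The function name `project` and the use of Euclidean distance are likewise illustrative.

```python
import math
from typing import Optional, Sequence

NOISE = -1  # label conventionally given to unclustered points by DBSCAN-style algorithms


def project(
    new_vec: Sequence[float],
    known_vecs: Sequence[Sequence[float]],
    known_labels: Sequence[int],
    eps: float,
) -> Optional[int]:
    """Return the cluster label for `new_vec`, or None if it looks new.

    Assumed rule: nearest clustered neighbor within Euclidean distance `eps`.
    """
    best_label, best_dist = None, math.inf
    for vec, label in zip(known_vecs, known_labels):
        if label == NOISE:
            continue  # unclustered old samples cannot vouch for a cluster
        dist = math.dist(new_vec, vec)
        if dist < best_dist:
            best_label, best_dist = label, dist
    return best_label if best_dist <= eps else None


# Hypothetical 2-D Representative Vectors, for illustration only.
known = [(0.0, 0.0), (0.1, 0.0), (5.0, 5.0), (9.0, 0.0)]
labels = [0, 0, 1, NOISE]
```

With these toy values, a sample at `(0.05, 0.05)` would be assigned to cluster 0, while a sample at `(9.0, 0.1)` would be reported as new because its only close neighbor is unclustered.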

The tool also lets us see how phishing kits evolve over time and how long a phishing kit remains deployed in the wild. It can also be used to blacklist samples that behave the same as ones that were already validated.

Initial Results

Malicious Clustering Subsample Experiment

To verify whether the algorithm correctly groups different phishing samples into the same cluster, we clustered 249 valid samples based on their behavior. To validate the clustering, we created a tool to manually inspect each cluster with respect to the code that was executed, the number of blocks in each of them, and the domains requested.

Among the clusters with more than one sample, most correctly grouped different samples with the same behavior, which suggests that the pipeline is working as intended.

We did find some clusters containing exactly identical samples, which might be due to redirection or to a failure to filter out duplicate samples. Still, we obtained interesting clusters containing slightly different execution blocks or domains, but with enough in common to make us believe that the overall code structure was developed by the same author.

Reliability of the Crawler

To test whether the same URL is visited consistently by the browser and assigned to the same cluster every time, we propose the following experiment.

We selected the 10 most accessed URLs from the Alexa Top 100 index and used our crawler to visit each of them 10 times sequentially. Our hypothesis is that the clustering will produce 10 clusters, each containing all the samples of a single URL.

List of URLs:

- https://www.google.com/

- https://www.facebook.com/

- https://www.youtube.com/

- https://www.wikipedia.org/

- https://www.amazon.com.br/

- https://www.instagram.com/

- https://www.linkedin.com/

- https://www.reddit.com/

- https://www.whatsapp.com/

- https://openai.com/

WhatsApp, Instagram, OpenAI, Wikipedia, YouTube, and Facebook were each grouped 100% correctly into a single cluster. Google and LinkedIn were 90% correctly clustered; the samples that fell outside the main cluster had fewer instruction blocks, meaning that assets were not successfully loaded during the 10 s crawl or that the browser was evaded. Amazon was split into 3 different clusters, with 10, 11-12, and 7 instruction blocks respectively. Reddit could not be parsed by the crawler.
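A per-URL consistency score like the percentages above can be computed as the fraction of visits that land in the most common cluster. The sketch below assumes this definition; the function name `consistency` and the cluster labels are made up for illustration and are not the experiment's actual output.

```python
from collections import Counter
from typing import Sequence


def consistency(labels: Sequence[int]) -> float:
    """Fraction of visits of one URL that fall in its most common cluster."""
    if not labels:
        return 0.0
    most_common_count = Counter(labels).most_common(1)[0][1]
    return most_common_count / len(labels)


# Illustrative labels for 10 visits of one URL: 9 in cluster 3, 1 elsewhere.
visits = [3, 3, 3, 3, 3, 3, 3, 3, 3, 7]
score = consistency(visits)
```

Under this definition, a URL whose 10 visits all share one cluster scores 1.0, and a single stray visit lowers the score to 0.9.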

After manually inspecting the sample embeddings projected into a 3D space, we see that even though these samples were not assigned to the same cluster, they were not far from each other, which means that a small adjustment to the clustering algorithm would group them together. However, we would have to verify that the adjustment does not make the pipeline more prone to grouping different samples in the same cluster, which is equally bad.

Overall, this means that the processing tool still needs some tuning of the embedding technique and clustering parameters before it can be deployed.

Effectiveness of the Projector

As a preliminary experiment, we ran the projector on a rather small sample set covering September 25th to September 29th, with September 29th as the target date to be mapped.

Overall, we used 7882 samples of old data and 1407 samples of new data. Of the new samples, 471 were assigned to a cluster, and 409 of those were assigned to clusters that contained old samples, indicating a repeated phishing kit deployment. The clustering algorithm still needs tuning so that all new samples are assigned to a cluster, but among the new samples that were clustered, about 87% (409 of 471) were not new but redeployments of an already known phishing kit.
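The proportions behind this result can be checked directly from the counts reported above. Note that the redeployment share is relative to the 471 new samples that were assigned to a cluster, not to all 1407 new samples; the variable names are ours.

```python
# Counts reported for the September 25th-29th experiment.
old_samples = 7882
new_samples = 1407
clustered_new = 471   # new samples assigned to any cluster
redeployed = 409      # new samples assigned to a cluster containing old samples

share_clustered = clustered_new / new_samples              # about 0.335
share_redeployed_of_clustered = redeployed / clustered_new  # about 0.868
share_redeployed_of_all_new = redeployed / new_samples      # about 0.291

print(f"clustered: {share_clustered:.1%}")
print(f"redeployed among clustered: {share_redeployed_of_clustered:.1%}")
print(f"redeployed among all new: {share_redeployed_of_all_new:.1%}")
```

Roughly two thirds of the new samples were left unclustered, so the share of redeployments among all new samples is only a lower bound until the clustering assigns more of them.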

Next Steps

Another experiment we would like to run concerns progressive log files, that is, understanding where a sample lies in the projection space while the page is still loading. We have yet to implement this experiment, but it would allow us to build an instrumented version of Chromium that uses this approach as a blacklist for known phishing kits.

Our crawler is still constantly collecting new samples, which will give us insight into the distribution of phishing kits over time. However, we will have to leave it running for a while before we can draw any conclusions.

We are also considering using the dynamic behavior of phishing kits to build a classification tool that differentiates benign and malicious websites based on that behavior.